UCL Connected Environments

Featured projects

Recent Posts

UCL is accepting applications for the EPSRC Landscape Award 2026/27, with 50 fully funded four-year PhD studentships available.

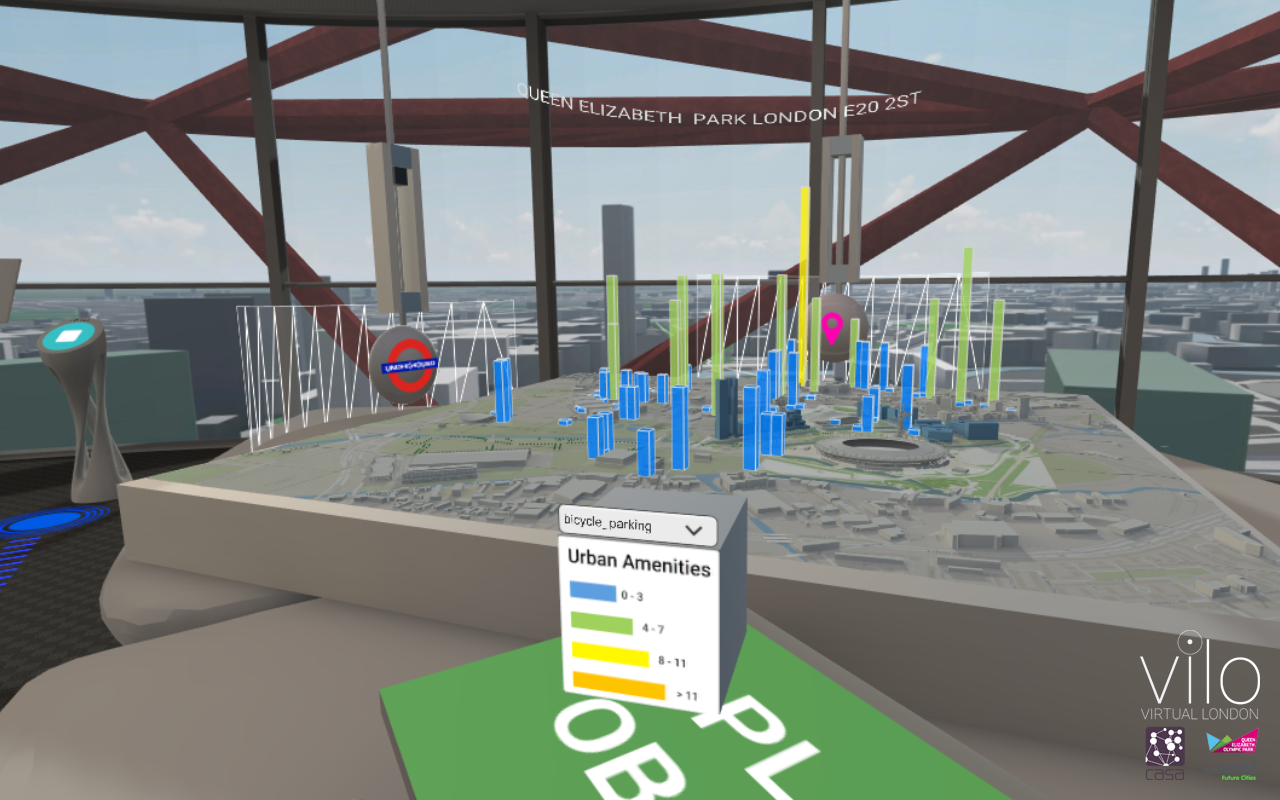

New Book: Cities in the Metaverse

Our annual exhibition for the MSc Connected Environments at UCL East took place from 17 July to 3 August 2025 at UCL Marshgate. Missed it? Don't worry, we've got you covered.

Background and details on how to make your own 3D-printed clock, part of a summer project at Connected Environments.

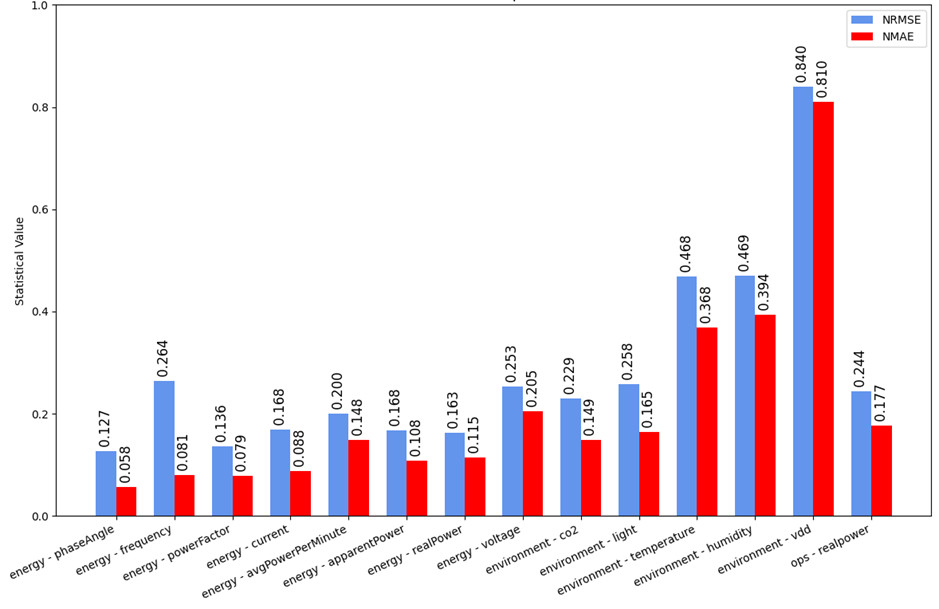

A deep dive into the use of large language models (LLMs) for time series analysis, benchmarking Chronos, LLM4TS, and TEMPO on real-world building datasets, and exploring their potential for forecasting and anomaly detection.

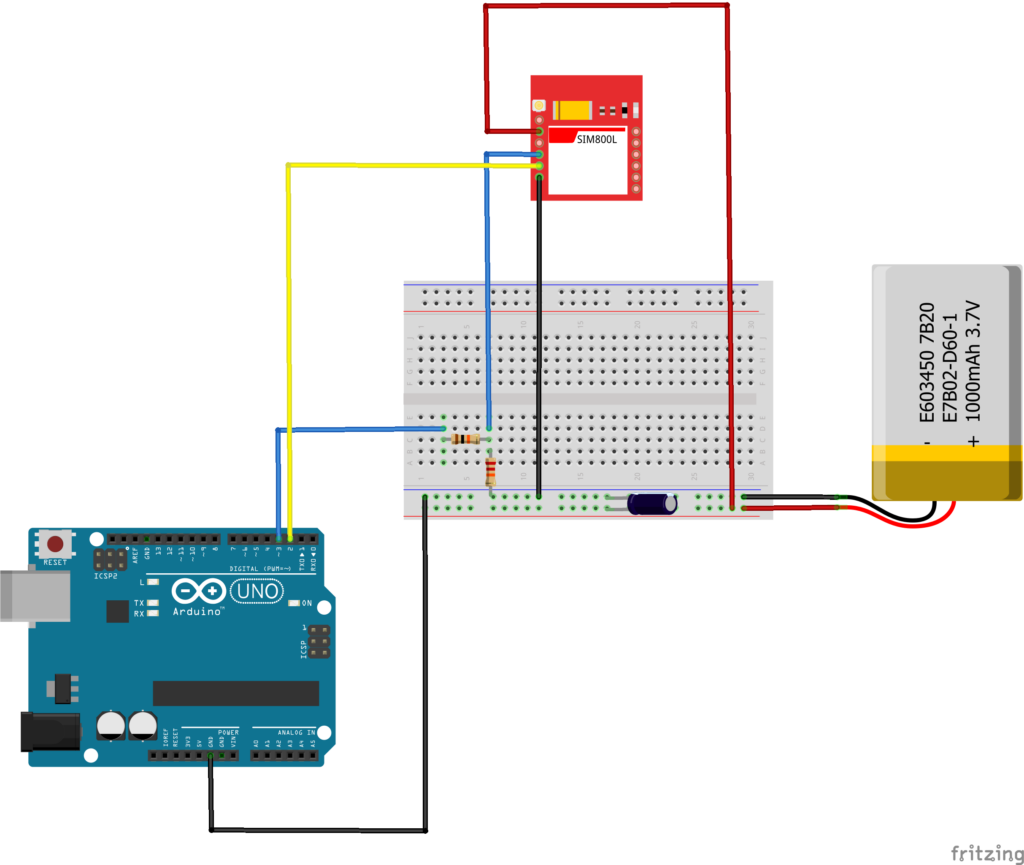

Learn how to connect mobile sensors to the internet using a GSM module and Arduino.

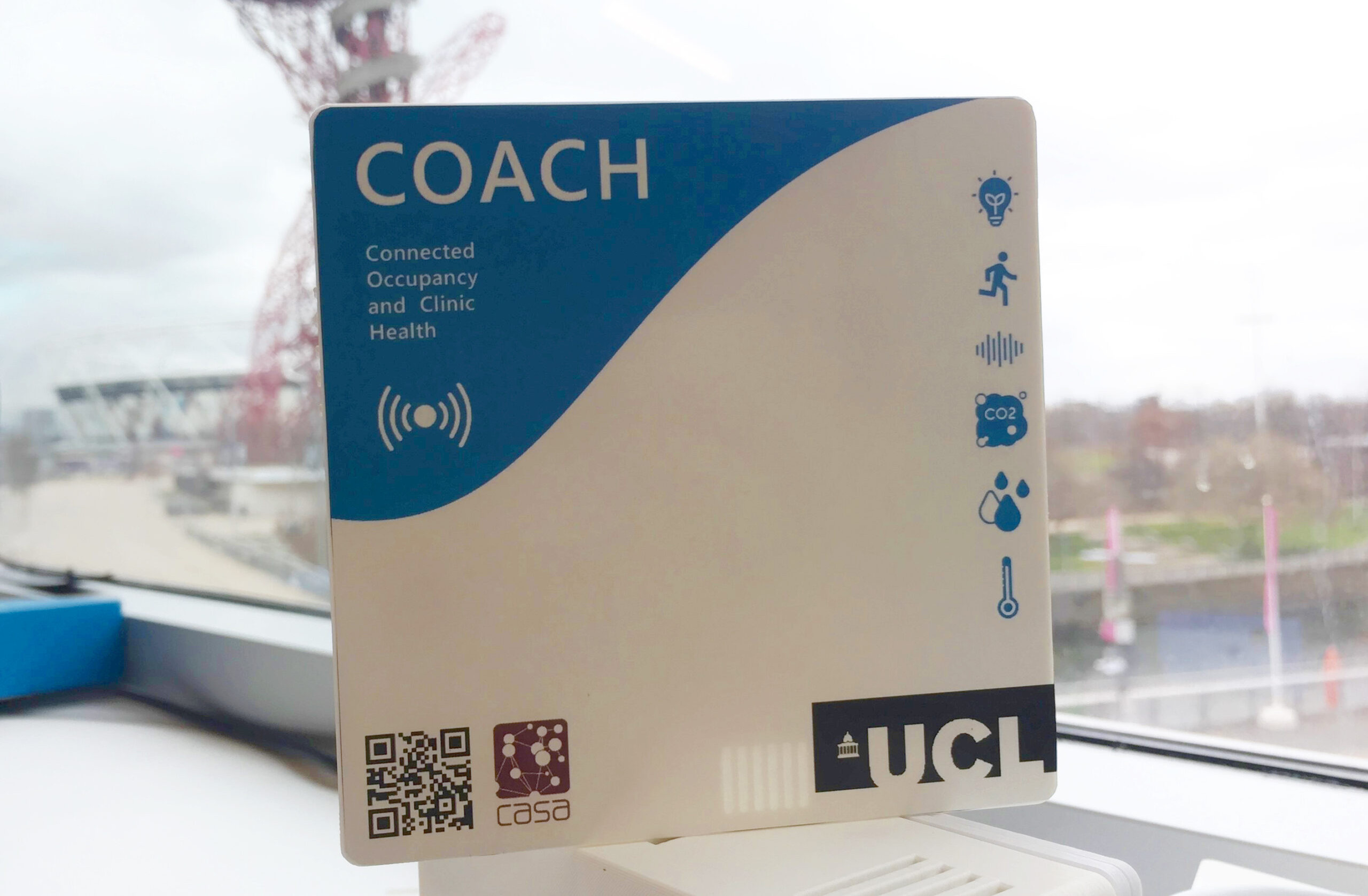

An in-depth look at the design and manufacturing steps for COACH devices, which monitor environmental factors such as occupancy and CO₂ to help optimise patient flow and clinic operations at Moorfields Eye Clinic.

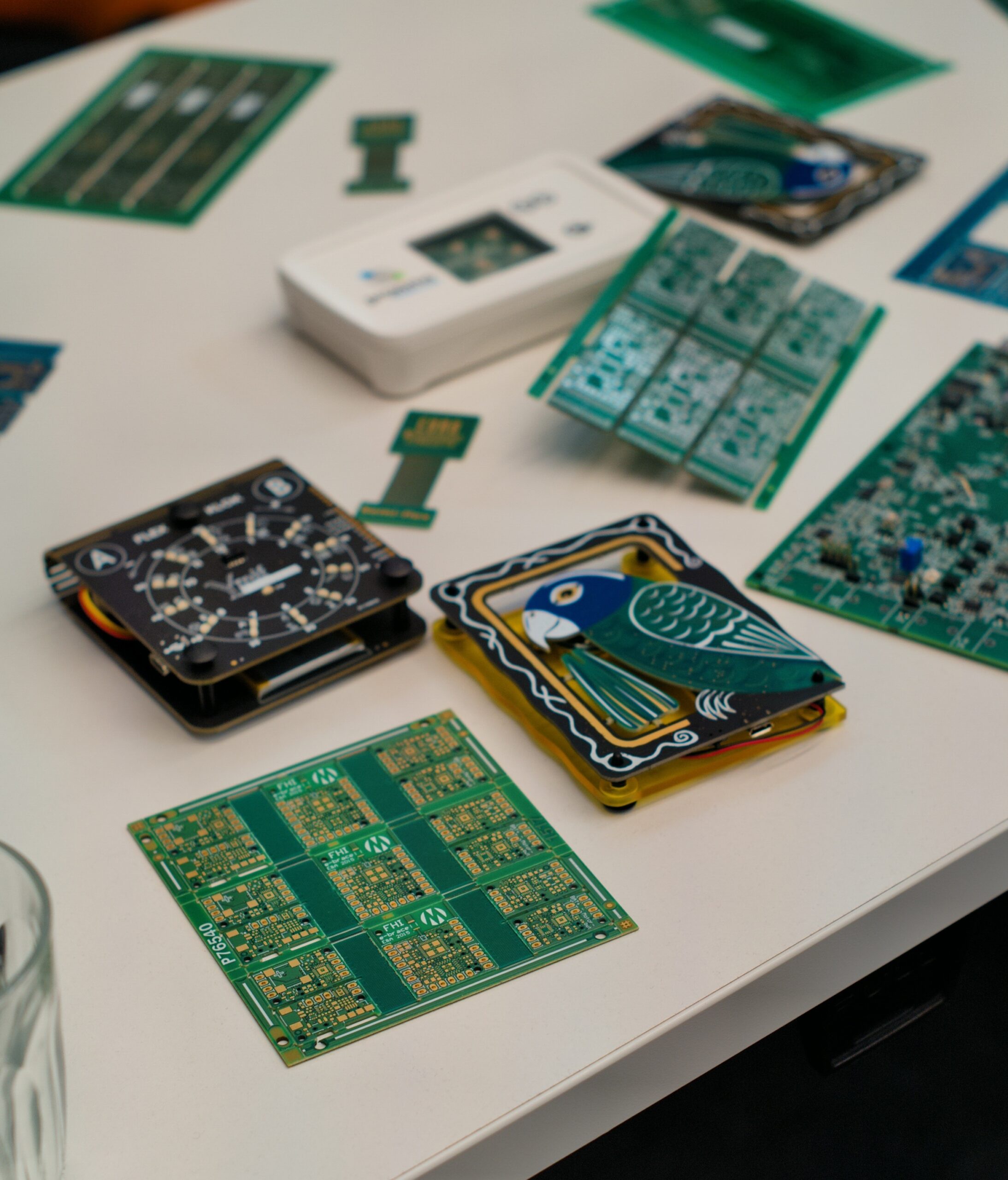

A hands-on look at the 2024 Pro2 Summer School in Lancaster, exploring how to turn prototypes into production-ready devices in just 72 hours.