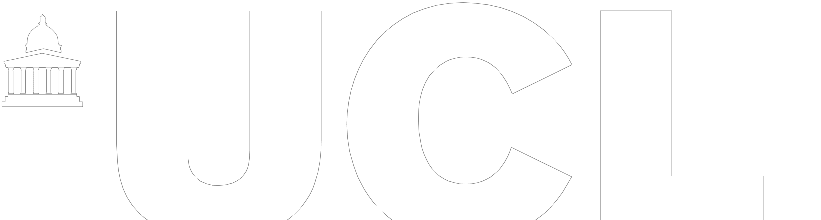

The Connected Environments team have been working alongside industry partners Mace, 3D Repo, eviFile and Mission Room, and academic partners Imperial College London, to develop the AEC Production Control Room (AECPCR). The AECPCR employs site-monitoring and visualisation technologies to improve the management and delivery of largescale construction projects.

Since deploying the Mission Room screens in both demonstration and live project environments, the Connected Environments team has been testing ways to monitor the screens to understand how people interact with the interfaces. We’re hoping to use quantitative and qualitative methods to track these interactions and continue developing the control room technology in ways that maximise its value and impact.

Before looking at quantitative measurements, we observed the weekly look-ahead meetings at Mace’s Paddington Square development to understand how the newly introduced interfaces were being used on-site. By monitoring these meetings, we garnered rich real-time feedback about how different parties, like contractors or site directors, interact with and utilise the Mission Room setup. This ethnographic approach also allowed us to work with our partners to incorporate user feedback as users suggested improvements.

In order to support our qualitative analysis, we are also exploring a number of quantitative analyses. We first looked at utilising screen capture software to understand where users were looking and interacting with the content being published on the three large Mission Room displays. We used InputScope, an open-source application that captures keyboard and mouse movement. InputScope provides both visualisations and critical summary statistics but also captures the data in a form that can be further analysed alongside other datasets. The image below highlights InputScope visualisation outputs.

A second approach was to use computer vision to track and count the number of people in front of the screen. We first used an OpenMV camera and Raspberry Pi trained to detect faces. As described by OpenMV, the OpenMV H7 Plus camera is “a small, low power, microcontroller board which allows you to easily implement applications using machine vision in the real-world”. We implemented a face detection algorithm (similar to the Haar Cascade we used here) which provided ok results but struggled to detect people that were not directly in front of the camera. Since people interacted with the screen even when not directly facing it, we decided face detection was not the best way to measure engagement. To improve the accuracy of the counts we moved to a person detection approach utilising a more powerful camera – the OAK-1 from Luxonis – which runs the computer vision algorithms on dedicated hardware on the camera.

The unique thing about both these solutions is their ability to perform AI on the edge. Both cameras perform the respective face and person counting activities on the devices themselves which makes them different from the more traditional AI solutions uses you see deployed via a webcam. This was chosen because this preserved privacy for us and the people being counted. No footage is actually stored anywhere and the Raspberry Pi strictly acts as a gateway storing the respective anonymised counts without any imagery.

Developing a system that “just works” in the construction site environment provided many interesting and sticky challenges to overcome. Firstly, when talking about IoT devices we typically assume they are connected to the internet. The control rooms we are observing are typically in site offices or the basement of buildings that are under construction. As such, much of the infrastructure (power and internet) is more basic than you might expect. The solution deployed had to run continually, recover after power outages and work with intermittent network access. Like many simple AI projects, getting a demo working proved to be half the effort, the other half was engineering a solution that would deal with things like unexpected camera feed drop out. Work in progress can be found on the GitHub link here.

So what are we learning? Implementing these designs have made us think critically about whether people or faces more accurately reflect engagement with the interfaces. While for now, we’ve chosen a person detection model, this is still an ongoing debate. Beyond that more theoretical discussion, we also had to contend with challenges like finding the optimal position for the camera in an ever-changing meeting room and obstacles such as people or items blocking the camera. A test image of the face detection model taken on-site is below.

The next steps for us are twofold. Firstly, we want to integrate both types of quantitative data in a better way to build a more compelling narrative using the screen capture technology alongside the person detection data. Secondly, we’ll continue to fine-tune the person capture model to optimise it for the current room on-site while also safeguarding that it can be easily changed and updated for new environments.

Stay tuned for future improvements!